So I need multiple macros to be able to run simultaneously. Is there any way to do that? Right now starting a new one while another is running terminates the other. I tried setting them to all different kinds of priorities to no avail.

Reading through this list of posts, it seems like you are trying to use/create external software to control Eos so that, instead of using the built-in effects package, you control channels independently but also somehow record that back into Eos...

Not really sure why you would want to do this other than curiosity.

You are coming at this from a coding background but console programming comes from a design background and historic theater. You may be able to do more of what you are looking for using OSC but it seems like you are hung up on using macros. Eos does not have a very advanced macro language yet. It has been discussed and considered over the years but there are many other priorities in the development of this console line.

The effects in the video you posted can be achieved with the features already in the console without macrotizing.

If you can give a good explanation of what result you are looking for rather than the actions you want to take to get the result we can help you with the current software that is available to achieve those results.

If you just want to code and ignore what the software is built to do you may want to look at the MA line of consoles as they are more open to 3rd party enhancement through LUA scripts and have a more advanced macro engine in them.

Lighting consoles are tools and finding the right tool for you and your job is important.

I love programming on Eos consoles but have also learned how to program other systems and use that knowledge to expand my use of Eos.

This is not meant to offend; I'm just trying to help and understand what you are trying to achieve.

Andrew Webberley

Yeah, I have also been following the past few posts, wondering when the reason for all the workarounds was coming. He's just trying to make his software do what he wants instead of learning how to use the console to get what he wants.

This sweep forward and backward is so simple if you use the console how it is intended to be used.

chan X thru chan Y at # delay 0 thru timeA, record cue #

select last offset reverse at 0 delay 0 thru timeB, record cue next.

Set timecode events to trigger cues (my favorite method is export markers from video editor, import csv list)

Sure, if you like geeky techno syntax. But not if you prefer organic artistic flow.

Cameron, you hit the nail on the head... basically Eos doesn't use a Blender-like workflow (that he is accustomed to), and he doesn't want to wrap his head around conventional theatrical cue stacks. No problem, there is more than one way to program lights and many vendors take different approaches.

I know several people who have written their own lighting software, some that do a timeline like his approach. I did a bunch of code maintenance, patching and feature development for one of them that was commercially available and even deployed at a major show in Vegas. But its largest weakness was the fade engine, didn't scale as necessary and had to be virtually re-written, and they're complex beasts, especially to be performant.

However, no other system that I know of has gone to the point of basically creating a whole new piece of software that makes two other systems (Blender and Eos) do things they were not intended to do, simply because he doesn't want to go the extra step to make a fade engine and have his own actual lighting software... It's one thing to have software that adds functionality to what Eos is doing at its core, but it's another to expect Eos to fundamentally change how things work so that you can work around half of the system because you don't understand how it is supposed to work.

This is evident from his initial post here; in the Eos syntax you'd never use delay in that sort of way. There are a handful of ways, but I think many of us would simply use a follow cue and be done with it. A very conventional way, instead of trying to send two macros simultaneously, which have commands that contradict one another. Instead of putting the timing onus on his software to simply send the command when he wants them to execute.

The reason it's not DMX software is because why would you want to delete the strengths of Eos? It's a team effort. It allows Eos to only do what Eos is the very best at, it allows Blender to do what Blender is really good at, and it allows Sorcerer, my software, to combine them seamlessly with automation and a user-centric UX designed for middle school theatre.

This is not designed for professional lighting console programmers. It's designed for nontechnical people with ADHD who suffer at the hands of Eos's gaping weaknesses, who have to use Eos anyway because of its strengths. It's for people who want to be able to just focus on art without all the technicals, without having to delete the theater's console.

Why would I design yet another free dmx sequence based software when I can't compete against what Eos does best? I can't compete against its industry proliferation and with its FOH reliability and hardware. So I'm not. I'm adding to it.

And my complaint above was an honest question about a problem that only someone working on the type of project I'm working on would ever have. Yes, I now understand why the limitation is there. I do think a more OOP approach would ultimately be more powerful for Eos, but whatever. It's no big deal. I've already solved this problem on my end.

But its largest weakness was the fade engine, didn't scale as necessary and had to be virtually re-written, and they're complex beasts, especially to be performant.

This is pretty interesting. What was the most difficult part of that and how is it most commonly solved? Coming from Python, which is thread locked, I've never even ventured towards conceptualizing how that might work.

But it seems like it wouldn't be that different from how my harmonizer system works in Sorcerer. The problem in Sorcerer is that on frame change, you don't want to spam the console with contradictory commands coming from disagreeing controllers. So on frame_change_pre, Sorcerer runs all the updaters on all the properties that have changed from last frame. Those updaters add their requests to a list of requests. Then, on frame_change_post, the publisher takes all those requests, harmonizes them, simplifies them, completes them to make them make sense to the lighting console, and sends them off to the lighting console. And to keep all these change requests in a universal, itemized format that the harmonizer can modify as needed downstream, you have this internal CPVIA format for change_requests, which is a (channel, parameter, value, influence, argument) tuple.
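To make the collect-then-publish idea above a bit more concrete, here is a minimal sketch. It is not Sorcerer's actual code. The influence-weighted average, the reading of `argument` as a command template with `#` and `$` placeholders, and the names `ChangeRequest`, `harmonize`, and `simplify` are all assumptions made for illustration.

```python
from collections import defaultdict
from typing import NamedTuple


class ChangeRequest(NamedTuple):
    """One CPVIA change request (field meanings below are assumptions)."""
    channel: int
    parameter: str
    value: float
    influence: float  # assumed to be a blending weight
    argument: str     # assumed to be a command template: '#' = channels, '$' = value


def harmonize(requests):
    """Collapse conflicting requests into one value per (channel, parameter)."""
    buckets = defaultdict(list)
    for req in requests:
        buckets[(req.channel, req.parameter)].append(req)

    resolved = {}
    for key, group in buckets.items():
        total = sum(r.influence for r in group)
        if total <= 0:
            # Nobody has any influence, so fall back to the last request.
            value = group[-1].value
        else:
            # Assumption: disagreeing controllers blend by influence.
            value = sum(r.value * r.influence for r in group) / total
        resolved[key] = (round(value), group[-1].argument)
    return resolved


def simplify(resolved):
    """Merge channels that share parameter, value, and template into one command."""
    groups = defaultdict(list)
    for (channel, parameter), (value, argument) in resolved.items():
        groups[(parameter, value, argument)].append(channel)

    commands = []
    for (parameter, value, argument), channels in sorted(groups.items()):
        chan_text = " + ".join(str(c) for c in sorted(channels))
        commands.append(argument.replace("#", chan_text).replace("$", str(value)))
    return commands
```

In this sketch the updaters would append `ChangeRequest` items during frame_change_pre, and the publisher would call `simplify(harmonize(requests))` once during frame_change_post, so the console receives a single command per group rather than one per controller.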

So I would imagine that a fade engine could also make use of a CPVIA like protocol. It might look something like (channel, parameter, value, speed, completion_percentage, interpolation_type). And you would increment the completion_percentage argument on each pass of the timer. And the timer uses interpolation_type and speed on each iteration to determine what to publish and what the new completion_percentage should be. But like with Sorcerer's harmonizer, you don't immediately publish. On each timer iteration you wait for everyone to speak in case there are conflicts, and then you harmonize/simplify, and then you publish to the DMX system. That way it scales up.
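A rough sketch of that timer loop might look like the following. Everything here is hypothetical: the added `start` field (something has to supply the value being faded from), the reading of `speed` as fraction completed per second, and the highest-takes-precedence conflict rule are assumptions, not how any real fade engine is confirmed to work.

```python
from dataclasses import dataclass


def ease_in_out(t):
    """Smoothstep curve: slow start, slow finish."""
    return t * t * (3.0 - 2.0 * t)


INTERPOLATORS = {"linear": lambda t: t, "ease_in_out": ease_in_out}


@dataclass
class Fade:
    channel: int
    parameter: str
    start: float             # assumption: the engine knows the starting value
    value: float             # target value
    speed: float             # assumption: fraction of the fade completed per second
    completion: float = 0.0  # 0.0 to 1.0, the "completion_percentage"
    interpolation_type: str = "linear"

    def step(self, dt):
        """Advance the fade by dt seconds and return the current level."""
        self.completion = min(1.0, self.completion + self.speed * dt)
        curve = INTERPOLATORS[self.interpolation_type]
        return self.start + (self.value - self.start) * curve(self.completion)


def tick(fades, dt):
    """One timer pass: let every fade speak first, then resolve conflicts."""
    frame = {}
    for fade in fades:
        level = fade.step(dt)
        key = (fade.channel, fade.parameter)
        # Assumption: conflicting fades resolve highest-takes-precedence.
        frame[key] = max(frame.get(key, level), level)
    # Drop finished fades so the active list does not grow without bound.
    fades[:] = [f for f in fades if f.completion < 1.0]
    return frame  # publish this dict to the output stage once per tick
```

The point of returning one `frame` dict per tick is the same as in the harmonizer: nothing is published until every active fade has contributed, so the output stage sees one value per parameter per tick.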

Instead of putting the timing onus on his software to simply send the command when he wants them to execute.

My software works just fine, the point was to get all the logic onto the console for 100% of effects so that my software can be shut down and have everything run just from the console without having to rely on my software during the final show. Multi-modal playback. This little complaint was just an annoying tis-but-a-scratch that I've already solved on my end. Just made my job a lot harder, that's all. No big deal.

Re: Fade engine --- this software, which I did maintenance on but didn't originally write, was in VB.net 4.6.2 and had some significant limitations. (It is now at version 8.0.) So everything done on the user programming end had to work around a very convoluted fade engine that really should have been rewritten. But there was no money behind completely redoing the software in C++ or even upgrading the codebase. It was really just software we had at one venue, and we were lucky enough to have a close enough relationship with the software developer that I received access to the codebase.

The complexity of a fade engine really starts to show up when you permit multiple things to be pushing conflicting commands to the same channel (i.e. a cue with a slow focus move while a ballyhoo effect is running, and then I throw in a fader/submaster with a higher priority position and another submaster with a lower priority position for a fail-safe background state -- and then ask the question, where should the fixture be at this fractional second?). At the end of the day it's basically "just math", but having the correct logic to be performant, to make sure that 48k parameters can each be controlled by multiple layers of command without slowing down the clock, is harder. And when you run into threading/concurrency/race conditions, how are you handling that? Because an engine of this size (likely) just cannot run single-threaded. When it was an Ion 4000 you could easily do a thread for fade, another one for IO and another one for UI and get away with it.
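As a toy illustration of the "where should the fixture be at this fractional second" question, here is a minimal sketch for a single parameter. It assumes highest priority wins with latest-takes-precedence as the tie-breaker, which is a simplification and not a description of how Eos actually arbitrates. The `Layer` type and `resolve` function are hypothetical names.

```python
from typing import NamedTuple, Optional


class Layer(NamedTuple):
    name: str
    priority: int
    timestamp: float        # when this source last took control
    value: Optional[float]  # None means the source is not driving this parameter


def resolve(layers):
    """Pick the layer that owns the parameter at this instant."""
    active = [layer for layer in layers if layer.value is not None]
    if not active:
        return None
    # Highest priority wins; among equal priorities the most recent wins.
    return max(active, key=lambda layer: (layer.priority, layer.timestamp))


pan_sources = [
    Layer("background sub", priority=1, timestamp=0.0, value=10.0),
    Layer("cue focus move", priority=5, timestamp=1.0, value=45.0),
    Layer("ballyhoo effect", priority=5, timestamp=2.0, value=120.0),
    Layer("priority sub", priority=9, timestamp=0.5, value=None),
]
winner = resolve(pan_sources)
print(winner.name, winner.value)
```

The hard part described above is doing this for tens of thousands of parameters on every tick, with the sources being updated from other threads, which this single-parameter sketch does not attempt.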

Re: playback -- I think if your plan is to use macros to accomplish the timings that should be done in the cue list, you're going to run into a LOT of problems there. The macro system is (for the most part) just that: a macro system to replay what a real person would do, often (but not exclusively) from the programming state, not the operational state. Sure, there might be a macro to fade up house lights in 10 seconds, or flash the house lights because the show is about to start, but the overall timing of the cue list is where all of the complicated timings should happen. Consider how, instead of writing macros and complex effects that are being used during playback (I can see a lot of possible negative performance implications), your system should translate your visual programmer into writing actual properly constructed cues instead of reinventing the process.

I do see value in having a tool where you can visually see your audio file and then have markers that trigger something like blinder strobes or whatever, that you can drag and drop across a timeline. Another major console maker has something similar. However, I would suggest that once you get a finished product, at the end of the day it should simply create cues that are timecoded, which are easier to update and manipulate and are not abstracted behind macros that have no meaning by themselves apart from the one set of cues they were created for.

Consider how, instead of writing macros and complex effects that are being used during playback (I can see a lot of possible negative performance implications), your system should translate your visual programmer into writing actual properly constructed cues instead of reinventing the process.

The way I think about this is do you want to do timecoded lighting shows using an Excel spreadsheet or by using a video editor? Most people would say video editor, since you're talking about a 1 dimensional versus 2 dimensional layout. Cue stacks abstract the x axis (time) to a float value while a video editor visualizes it intuitively.

When you compare a cue-based system to a keyframe-based system, you find that cues are the only thing you can use for human-initiated events. But if all events in a contained sequence can be initiated by a predetermined clock, you can use keyframes instead. Keyframes are significantly easier to work with than cues for more complex tasks. That's why they use keyframes, not cues, to make movies like Frozen and Wall-E.

So my software makes it super easy to combine keyframe workflows with cue workflows. For instance, you can make a light blink out SOS "dit dit dit dahhhh dahhhh dahhhh dit dit dit" in under 60 seconds with my software and have it just run on the console by itself with a macro or with a cue.
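To show why a pattern like SOS is natural to express on a clock, here is a small sketch that turns Morse code into timed on/off events. It is not taken from Sorcerer. It uses standard Morse timing (a dit is one unit, a dah is three, one unit between symbols, three units between letters), and `morse_events` and the `unit` length are illustrative choices.

```python
MORSE = {"S": "...", "O": "---"}


def morse_events(text, unit=0.2):
    """Return a list of (time_in_seconds, "on" or "off") events for the text."""
    events = []
    t = 0.0
    for letter in text:
        for symbol in MORSE[letter]:
            duration = unit if symbol == "." else 3 * unit
            events.append((round(t, 3), "on"))
            t += duration
            events.append((round(t, 3), "off"))
            t += unit  # one-unit gap between symbols within a letter
        t += 2 * unit  # extend the trailing gap to three units between letters
    return events


for time, state in morse_events("SOS"):
    print(f"{time:6.2f}s  {state}")
```

Each event is just a timestamp plus an action, which is the shape an event list or a set of keyframes needs. Expressing the same thing as hand-built cues would mean eighteen cues for nine blinks.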

I think if your plan is to use macros to accomplish the timings that should be done in the cue list, you're going to run into a LOT of problems there.

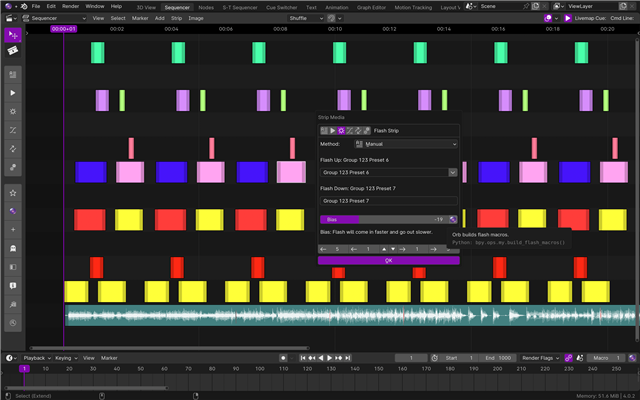

I wouldn't call it a plan, per se, since this technology has been out and open-sourced for many months now: github.com/.../Sorcerer. It works pretty darn well. The key is that we bind the macros to event-list events. Something like a flash strip in the sequencer will have a start macro that says something like "Chan 1 at Full Sneak Time .5 Enter", and its down flash will be "Chan 1 at 0 Sneak Time .5 Enter", and then it automatically binds those to the event list based on the strip's position in the sequencer when you hit Shift+Spacebar.
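For anyone trying to picture that binding step, here is a minimal sketch of turning strip positions into timecoded macro events. It is not Sorcerer's implementation. `FlashStrip`, `frame_to_timecode`, and `bind_strips` are hypothetical names, the timecode is assumed to be non-drop-frame, and the command strings simply follow the two examples quoted above.

```python
from typing import NamedTuple


class FlashStrip(NamedTuple):
    channel: int
    start_frame: int  # strip's first frame in the sequencer
    end_frame: int    # frame where the down flash should fire


def frame_to_timecode(frame, fps=30):
    """Convert a frame count to HH:MM:SS:FF (non-drop-frame assumed)."""
    seconds, ff = divmod(frame, fps)
    minutes, ss = divmod(seconds, 60)
    hh, mm = divmod(minutes, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def bind_strips(strips, fps=30, sneak_time=0.5):
    """Build a sorted list of (timecode, macro_text) pairs for the event list."""
    events = []
    for strip in strips:
        up = f"Chan {strip.channel} at Full Sneak Time {sneak_time:g} Enter"
        down = f"Chan {strip.channel} at 0 Sneak Time {sneak_time:g} Enter"
        events.append((frame_to_timecode(strip.start_frame, fps), up))
        events.append((frame_to_timecode(strip.end_frame, fps), down))
    # Zero-padded timecodes sort correctly as plain strings.
    return sorted(events)


for timecode, macro in bind_strips([FlashStrip(1, 30, 45), FlashStrip(2, 15, 60)]):
    print(timecode, macro)
```

How the resulting macros and events actually get written to the console (OSC, file import, or something else) is outside this sketch.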

I don't use a cue-based workflow for such things because cues just don't make sense. I use them for qmeos but not for sequence-based effects. Consider this setup for example:

You can't really express that with cues. I mean you could, but it would be kind of ridiculous. Cues simply are not the right tool for the job. Instead, it just automatically adds simple macros to the event list. Like bumps.

The complexity of a fade engine really starts to show up when you permit multiple things to be pushing conflicting commands to the same channel

Preach. Yeah, this was a pretty fun problem on Sorcerer too. It was the reason I recently rewrote my entire codebase (about 20,000 loc). It's pretty fun to figure out how to do this in a maintainable, readable way.

But the good thing is my software is designed to bog down. It's allowed to. It doesn't have to be fast. It's a renderer, not a real-time playback FOH system. You render its stuff onto the console and the console does all the heavy lifting for the final show. Sorcerer can render out its animations one frame per second if you need it to. Then when you play it back on the console, it's buttery smooth.

It's definitely not designed for 48k parameters though, not at once. But you can chunk it out and render each section individually if you really need to. The workflow is pretty similar to 3D animation where you only see the bare bones minimum during the entire animation process, and then only at the very end do you put everything together to make it look pretty. Like how animators work in Object mode where the models are nude, bald, and nothing is textured/colored/materialed.

I don't think anyone here is saying there isn't value to your concept of a timeline-based editor for Eos. I think what is being said is that the concept of Eos computer programmers changing their program to cooperate with your program is a little too far of a stretch for this forum.

It might better be explored in a different way. Asking the forum moderators to add a third party Dev forum section may be a good approach. This way all of your specific questions related to your program do not dilute the questions about EOS family itself.

I think what is being said is that the concept of Eos computer programmers changing their program to cooperate with your program is a little too far of a stretch for this forum.

That was a minor gripe that's a million miles in the past now. Doesn't matter.

I am speaking about your future posts, so I still think it's relevant.
